The $10,000 Mistake: When the Boss Yanked the Server Plug Mid-Update

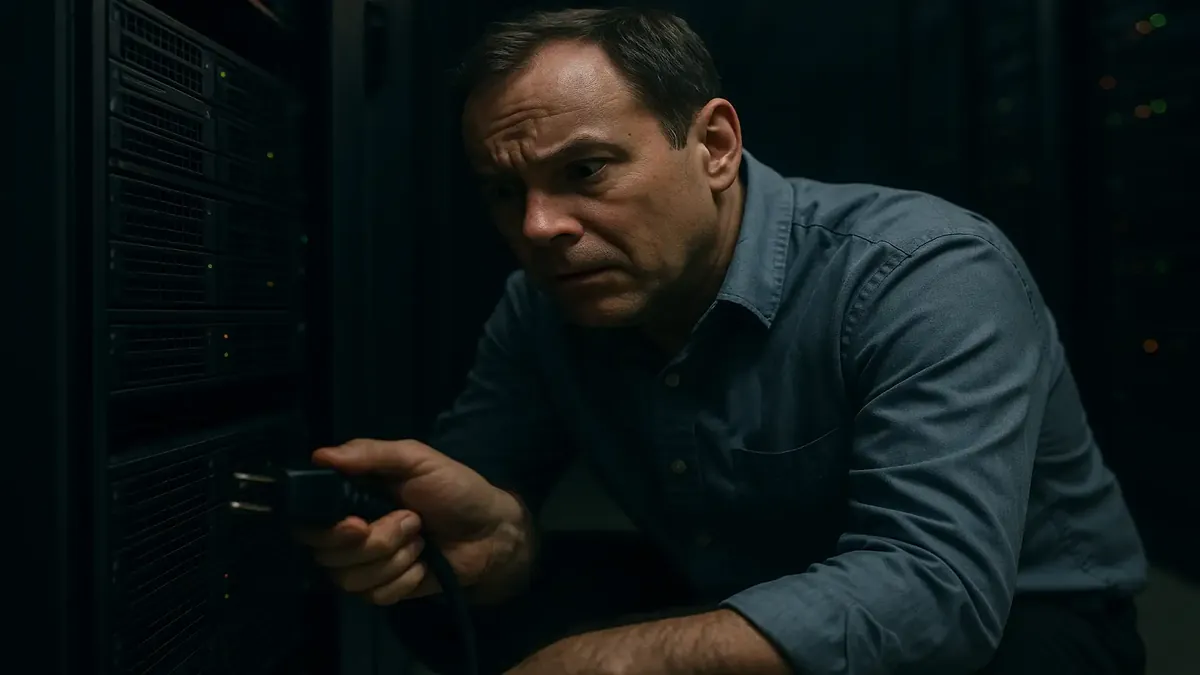

Picture this: It’s 3:00 AM on a Thursday in 2011. The world is quiet, the city is sleeping, and in a dimly lit office, Mark—the owner of a bustling finance company—decides it’s the perfect time to “fix” the email server. He’s got his coffee, his confidence, and absolutely zero patience for IT protocols. What could possibly go wrong?

Let’s just say, if you’ve ever wondered what happens when someone literally pulls the plug on a server during critical updates, this is the cautionary tale you need. Buckle up, because this ride gets bumpy, and there are lessons here for bosses, techies, and anyone who’s ever thought, “How hard can it be?”

Mark vs. The Exchange Server: Who Will Win?

Our story begins with Mark, a classic example of the “I know better than IT” boss. He owned a successful finance firm, worked odd hours, and stubbornly clung to physical servers like they were golden eggs. Virtualization? Not on his watch. Trust the experts? Not when Mark’s in charge.

Enter the Exchange 2010 server, humming along on Windows Server 2008 R2. Like all good sysadmins, u/OinkyConfidence (our Reddit storyteller) had set up a monthly maintenance window for updates: 3 AM, when the office was as empty as Mark’s understanding of IT best practices.

But Mark, ever the early riser, was in before the birds. When his Outlook disconnected (because, you know, the server was busy updating), he didn’t call IT. He didn’t check the schedule. He didn’t even Google it. Instead, Mark went full caveman: he pulled the power cords, waited a few seconds, and plugged them back in.

The Aftermath: When Disaster Strikes Before Sunrise

Fast forward to 6 AM. Our hero, u/OinkyConfidence, is several states away at a conference, probably enjoying a well-deserved break. The phone rings.

“I can’t get into email,” Mark says.

The conversation that follows could be a scene from any IT horror film. The moment Mark admits he yanked the plugs at 3:15 AM, right in the middle of updates, you can practically hear the sysadmin’s soul leave his body.

The rest of the morning is a blur of remote restores, panic, and missed conference sessions. The Exchange database is corrupted beyond repair, forcing a rollback to the previous night’s backup. Any email that arrived after that backup was taken vanishes, never to be seen again. The entire office pays the price for Mark’s 3 AM meddling.

And in a twist that would make any IT pro cheer, Mark is billed emergency rates for his blunder. Sometimes karma comes with an itemized invoice.

Lessons from the Server Room

What can we learn from Mark’s $10,000 mistake (and yes, data loss, lost productivity, and emergency IT time add up fast)?

- Don’t Touch What You Don’t Understand: Servers aren’t toasters. You can’t just “unplug and replug” them, especially during updates. Data corruption is real, and recovery isn’t always possible.

- Trust Your IT Team: When your tech folks set maintenance windows, it’s for a reason. Ignoring their advice isn’t just disrespectful; it’s expensive.

- Virtualization Isn’t a Fad: Mark’s refusal to modernize left his company with an aging, single-point-of-failure system. Virtual environments offer snapshots, quick restores, and fewer Mark-induced disasters.

- Emergency Rates Are Real: IT emergencies cost more. If you break it, you (or your company) will pay for the privilege of having it fixed at 6 AM, especially if you ignored all prior advice.
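For the curious, here is roughly what “check the schedule first” could look like in code. This is only a sketch in Python, not anything from the original story: the story says the window was monthly at 3 AM, but the second-Thursday rule, the two-hour length, and the names `MaintenanceWindow` and `safe_to_power_cycle` are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta


@dataclass(frozen=True)
class MaintenanceWindow:
    # Only the 3 AM start comes from the story; everything else is assumed.
    nth: int = 2                              # assumed: 2nd matching weekday of the month
    weekday: int = 3                          # assumed: Thursday (Monday is 0)
    start: time = time(3, 0)                  # from the story: 3 AM
    duration: timedelta = timedelta(hours=2)  # assumed window length

    def start_for_month(self, year: int, month: int) -> datetime:
        """Return when this month's window opens."""
        first = datetime(year, month, 1)
        # Days from the 1st of the month to the first matching weekday.
        offset = (self.weekday - first.weekday()) % 7
        day = 1 + offset + 7 * (self.nth - 1)
        return datetime(year, month, day, self.start.hour, self.start.minute)

    def contains(self, moment: datetime) -> bool:
        """True if `moment` falls inside this month's window."""
        begin = self.start_for_month(moment.year, moment.month)
        return begin <= moment < begin + self.duration


def safe_to_power_cycle(moment: datetime, window: MaintenanceWindow) -> bool:
    """False while scheduled maintenance should be running."""
    return not window.contains(moment)


if __name__ == "__main__":
    window = MaintenanceWindow()
    # Hypothetical dates: the second Thursday of October 2011.
    print(safe_to_power_cycle(datetime(2011, 10, 13, 3, 15), window))  # False
    print(safe_to_power_cycle(datetime(2011, 10, 13, 6, 0), window))   # True
```

Even when a check like this says the coast is clear, a hard power pull is still a last resort. The point is simply that the schedule was there to be looked at before anyone reached for the cord.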

Epilogue: The Server Survives… Until It Doesn’t

In a final twist, Mark’s business didn’t survive the COVID-19 pandemic. They liquidated, and, according to our storyteller, probably clung to that very same Exchange 2010 server until the bitter end. By then, the IT provider had long since fired them as a client—a happy ending for everyone but Mark.

Don’t Be a Mark

So, next time you’re tempted to “just reboot” the server, remember Mark: the man, the myth, the IT department’s worst nightmare. If you ever wonder why sysadmins are so grumpy (or why they have so many gray hairs), stories like this are the reason.

Got your own tales of tech support terror, or a wild server room sighting? Share them in the comments! And if you’re a boss, here’s a tip: when in doubt, call IT. Trust us, it’ll save everyone a headache, and maybe a few thousand dollars.

Original Reddit Post: Mark pulled the plug on the Exchange server during updates